Chapter 3 Frequency Modeling

Chapter Preview. A primary focus for insurers is estimating the magnitude of aggregate claims it must bear under its insurance contracts. Aggregate claimsThe sum of all claims observed in a period of time are affected by both the frequency and the severity of the insured event. Decomposing aggregate claims into these two components, each of which warrant significant attention, is essential for analysis and pricing. This chapter discusses frequency distributions, summary measures, and parameter estimation techniques.

In Section 3.1, we present terminology and discuss reasons why we study frequency and severity separately. The foundations of frequency distributions and measures are presented in Section 3.2 along with three principal distributions: the binomial, the Poisson, and the negative binomial. These three distributions are members of what is known as the \((a,b,0)\) class of distributions, a distinguishing, identifying feature which allows for efficient calculation of probabilities, further discussed in Section 3.3. When fitting a dataset with a distribution, parameter values need to be estimated and in Section 3.4, the procedure for maximum likelihood estimation is explained.

For insurance datasets, the observation at zero denotes no occurrence of a particular event; this often deserves additional attention. As explained further in Section 3.5, for some datasets it may be impossible to have zero of the studied event or zero events may follow a different model than other event counts. In either case, direct fitting of typical count models could lead to improper estimates. Zero truncation or modification techniques allow for more appropriate distribution fit.

Noting that our insurance portfolio could consist of different sub-groups, each with its own set of individual characteristics, Section 3.6 introduces mixture distributions and methodology to allow for this heterogeneity within a portfolio. In Section 3.7 an example is given that demonstrates how standard frequency distributions can often provide a good fit to real data. Exercises are presented in Section 3.8 and Section 3.9.1 concludes the chapter with R Code for plots depicted in Section 3.4.

3.1 Frequency Distributions

In this section, you learn how to summarize the importance of frequency modeling in terms of

- contractual,

- behavioral,

- database, and

- regulatory/administrative motivations.

3.1.1 How Frequency Augments Severity Information

Basic Terminology

In this chapter, loss, also referred to as ground-up loss, denotes the amount of financial loss suffered by the insured. We use claim to denote the indemnification upon the occurrence of an insured event, thus the amount paid by the insurer. While some texts use loss and claim interchangeably, we wish to make a distinction here to recognize how insurance contractual provisions, such as deductibles and limits, affect the size of the claim stemming from a loss. FrequencyCount random variables that represent the number of claims represents how often an insured event occurs, typically within a policy contract. Here, we focus on count random variables that represent the number of claims, that is, how frequently an event occurs. SeverityThe amount, or size, of each payment for an insured event denotes the amount, or size, of each payment for an insured event. In Chapter 7, the aggregate model, which combines frequency models with severity models, is examined.

The Importance of Frequency

Recall from Section 1.2 that setting the price of an insurance good can be a complex problem. In manufacturing, the cost of a good is (relatively) known. In other financial service areas, market prices are available. In insurance, we can generalize the price setting as follows. Start with an expected cost, then add “margins” to account for the product’s riskiness, expenses incurred in servicing the product, and a profit/surplus allowance for the insurer.

The expected cost for insurance can be determined as the expected number of claims times the amount per claim, that is, expected value of frequency times severity. The focus on claim count allows the insurer to consider those factors which directly affect the occurrence of a loss, thereby potentially generating a claim.

Why Examine Frequency Information?

Insurers and other stakeholders, including governmental organizations, have various motivations for gathering and maintaining frequency datasets.

Contractual. In insurance contracts, it is common for particular deductibles and policy limits to be listed and invoked for each occurrence of an insured event. Correspondingly, the claim count data generated would indicate the number of claims which meet these criteria, offering a unique claim frequency measure. Extending this, models of total insured losses would need to account for deductibles and policy limits for each insured event.

Behavioral. In considering factors that influence loss frequency, the risk-taking and risk-reducing behavior of individuals and companies should be considered. Explanatory (rating) variables can have different effects on models of how often an event occurs in contrast to the size of the event.

- In healthcare, the decision to utilize healthcare by individuals, and minimize such healthcare utilization through preventive care and wellness measures, is related primarily to his or her personal characteristics. The cost per user is determined by the patient’s medical condition, potential treatment measures, and decisions made by the healthcare provider (such as the physician) and the patient. While there is overlap in those factors and how they affect total healthcare costs, attention can be focused on those separate drivers of healthcare visit frequency and healthcare cost severity.

- In personal lines, prior claims history is an important underwriting factor. It is common to use an indicator of whether or not the insured had a claim within a certain time period prior to the contract. Also, the number of claims incurred by the insured in previous periods has predictive power.

- In homeowners insurance, in modeling potential loss frequency, the insurer could consider loss prevention measures that the homeowner has adopted, such as visible security systems. Separately, when modeling loss severity, the insurer would examine those factors that affect repair and replacement costs.

Databases. Insurers may hold separate data files that suggest developing separate frequency and severity models. For example, a policyholder file is established when a policy is written. This file records much underwriting information about the insured(s), such as age, gender, and prior claims experience, policy information such as coverage, deductibles and limitations, as well as any insurance claims event. A separate file, known as the “claims” file, records details of the claim against the insurer, including the amount. (There may also be a “payments” file that records the timing of the payments although we shall not deal with that here.) This recording process could then extend to insurers modeling the frequency and severity as separate processes.

Regulatory and Administrative. Insurance is a highly regulated and monitored industry, given its importance in providing financial security to individuals and companies facing risk. As part of their duties, regulators routinely require the reporting of claims numbers as well as amounts. This may be due to the fact that there can be alternative definitions of an “amount,” e.g., paid versus incurred, and there is less potential error when reporting claim numbers. This continual monitoring helps ensure financial stability of these insurance companies.

Show Quiz Solution

3.2 Basic Frequency Distributions

In this section, you learn how to:

- Determine quantities that summarize a distribution such as the distribution and survival function, as well as moments such as the mean and variance

- Define and compute the moment and probability generating functions

- Describe and understand relationships among three important frequency distributions: the binomial, Poisson, and negative binomial distributions

In this section, we introduce the distributions that are commonly used in actuarial practice to model count data. The claim count random variable is denoted by \(N\); by its very nature it assumes only non-negative integer values. Hence the distributions below are all discrete distributions supported on the set of non-negative integers \(\{0, 1, \ldots \}\).

3.2.1 Foundations

Since \(N\) is a discrete random variable taking values in \(\{0, 1, \ldots \}\), the most natural full description of its distribution is through the specification of the probabilities with which it assumes each of the non-negative integer values. This leads us to the concept of the probability mass function (pmf)A function that gives the probability that a discrete random variable is exactly equal to some value of \(N\), denoted as \(p_N(\cdot)\) and defined as follows: \[ p_N(k)=\Pr(N=k), \quad \hbox{for } k=0,1,\ldots \] We note that there are alternate complete descriptions, or characterizations, of the distribution of \(N\); for example, the distribution functionThe chance that the random variable is less than or equal to x, as a function of x of \(N\) defined by \(F_N(x) = \Pr(N \le x)\) and determined as: \[ F_N(x)=\begin{cases} \sum\limits_{k=0}^{\lfloor x \rfloor } \Pr(N=k), &x\geq 0;\\ 0, & \hbox{otherwise}. \end{cases} \] In the above, \(\lfloor \cdot \rfloor\) denotes the floor function; \(\lfloor x \rfloor\) denotes the greatest integer less than or equal to \(x\). This expression also suggests the descriptor cumulative distribution function, a commonly used alternative way of expressing the distribution function. We also note that the survival functionThe probability that the random variable takes on a value greater than a number x of \(N\), denoted by \(S_N(\cdot)\), is defined as the ones’-complement of \(F_N(\cdot)\), i.e. \(S_N(\cdot)=1-F_N(\cdot)\). Clearly, the latter is another characterization of the distribution of \(N\).

Often one is interested in quantifying a certain aspect of the distribution and not in its complete description. This is particularly useful when comparing distributions. A center of location of the distribution is one such aspect, and there are many different measures that are commonly used to quantify it. Of these, the meanAverage is the most popular; the mean of \(N\), denoted by \(\mu_N\),2 is defined as

Another basic aspect of a distribution is its dispersion, and of the various measures of dispersion studied in the literature, the standard deviationThe square-root of variance is the most popular. Towards defining it, we first define the varianceSecond central moment of a random variable x, measuring the expected squared deviation of between the variable and its mean of \(N\), denoted by \(\mathrm{Var}[N]\), as \(\mathrm{Var}[N]=\mathrm{E}{[(N-\mu_N)^2]}\) when \(\mu_N\) is finite. By basic properties of the expected value of a random variable, we see that \(\mathrm{Var}[N]=\mathrm{E}[N^2]-[\mathrm{E}(N)]^2\). The standard deviation of \(N\), denoted by \(\sigma_N\), is defined as the square root of \(\mathrm{Var}[N]\). Note that the latter is well-defined as \(\mathrm{Var}[N]\), by its definition as the average squared deviation from the mean, is non-negative; \(\mathrm{Var}[N]\) is denoted by \(\sigma_N^2\). Note that these two measures take values in \([0,\infty]\).

3.2.2 Moment and Probability Generating Functions

Now we introduce two generating functions that are found to be useful when working with count variables. For a discrete random variable, the moment generating function (mgf)The mgf of random variable n is defined the expectation of exp(tn), as a function of t of \(N\), denoted as \(M_N(\cdot)\), is defined as \[ M_N(t) = \mathrm{E}~{[e^{tN}]} = \sum^{\infty}_{k=0} ~e^{tk}~ p_N(k), \quad t\in \mathbb{R}. \] We note that while \(M_N(\cdot)\) is well defined as it is the expectation of a non-negative random variable (\(e^{tN}\)), it can assume the value \(\infty\). Note that for a count random variable, \(M_N(\cdot)\) is finite valued on \((-\infty,0]\) with \(M_N(0)=1\). The following theorem, whose proof can be found in Billingsley (2008) (pages 285-6), encapsulates the reason for its name.

Theorem 3.1.

Let \(N\) be a count random variable such that \(\mathrm{E}~{[e^{t^\ast N}]}\) is finite for some \(t^\ast>0\). We have the following:

- All moments of \(N\) are finite, i.e. \[ \mathrm{E}{[N^r]}<\infty, \quad r > 0. \]

- The mgf can be used to generate its moments as follows: \[ \left.\frac{{\rm d}^m}{{\rm d}t^m} M_N(t)\right\vert_{t=0}=\mathrm{E}[N^m], \quad m\geq 1. \]

- The mgf \(M_N(\cdot)\) characterizes the distribution; in other words it uniquely specifies the distribution.

Another reason that the mgf is very useful as a tool is that for two independent random variables \(X\) and \(Y\), with their mgfs existing in a neighborhood of \(0\), the mgf of \(X+Y\) is the product of their respective mgfs, that is, \(M_{X+Y}(t) = M_{X}(t)M_{Y}(t)\), for small \(t\).

A related generating function to the mgf is the probability generating function (pgf)For a random variable n, its pgf is defined as the expectation of s^n, as a function of s, and is a useful tool for random variables taking values in the non-negative integers. For a random variable \(N\), by \(P_N(\cdot)\) we denote its pgf and we define it as follows3: \[ P_N(s)=\mathrm{E}~{[s^N]}, \quad s\geq 0. \]

It is straightforward to see that if the mgf \(M_N(\cdot)\) exists on \((-\infty,t^\ast)\) then \[ P_N(s)=M_N(\log(s)), \quad s<e^{t^\ast}. \] Moreover, if the pgf exists on an interval \([0,s^\ast)\) with \(s^\ast>1\), then the mgf \(M_N(\cdot)\) exists on \((-\infty,\log(s^\ast))\), and hence uniquely specifies the distribution of \(N\) by Theorem 3.1. (As a reminder, throughout this text we use log as the natural logarithm, not the base ten (common) logarithm or other version.) The following result for pgf is an analog of Theorem 3.1, and in particular justifies its name.

Theorem 3.2.

Let \(N\) be a count random variable such that \(\mathrm{E}~{(s^{\ast})^N}\) is finite for some \(s^\ast>1\). We have the following:

- All moments of \(N\) are finite, i.e. \[ \mathrm{E}~{N^r}<\infty, \quad r\geq 0. \]

- The \(pmf\) of \(N\) can be derived from the pgf as follows: \[ p_N(m)=\begin{cases} P_N(0), &m=0;\cr &\cr \left(\frac{1}{m!}\right) \left.\frac{{\rm d}^m}{{\rm d}s^m} P_N(s)\right\vert_{s=0}\;, &m\geq 1.\cr \end{cases} \]

- The factorial moments of \(N\) can be derived as follows: \[ \left.\frac{{\rm d}^m}{{\rm d}s^m} P_N(s)\right\vert_{s=1}=\mathrm{E}~{\prod\limits_{i=0}^{m-1} (N-i)}, \quad m\geq 1. \]

- The pgf \(P_N(\cdot)\) characterizes the distribution; in other words it uniquely specifies the distribution.

3.2.3 Important Frequency Distributions

In this sub-section we study three important frequency distributions used in statistics, namely the binomial, the Poisson, and the negative binomial distributions. In the following, a risk denotes a unit covered by insurance. A risk could be an individual, a building, a company, or some other identifier for which insurance coverage is provided. For context, imagine an insurance data set containing the number of claims by risk or stratified in some other manner. The above mentioned distributions also happen to be the most commonly used in insurance practice for reasons, some of which we mention below.

- These distributions can be motivated by natural random experiments which are good approximations to real life processes from which many insurance data arise. Hence, not surprisingly, they together offer a reasonable fit to many insurance data sets of interest. The appropriateness of a particular distribution for the set of data can be determined using standard statistical methodologies, as we discuss later in this chapter.

- They provide a rich enough basis for generating other distributions that even better approximate or well cater to more real situations of interest to us.

- The three distributions are either one-parameter or two-parameter distributions. In fitting to data, a parameter is assigned a particular value. The set of these distributions can be enlarged to their convex hullsThe convex hull of a set of points x is the smallest convex set that contains x by treating the parameter(s) as a random variable (or vector) with its own probability distribution, with this larger set of distributions offering greater flexibility. A simple example that is better addressed by such an enlargement is a portfolio of claims generated by insureds belonging to many different risk classesThe formation of different premiums for the same coverage based on each homogeneous group’s characteristics..

- In insurance data, we may observe either a marginal or inordinate number of zeros, that is, zero claims by risk. When fitting to the data, a frequency distribution in its standard specification often fails to reasonably account for this occurrence. The natural modification of the above three distributions, however, accommodate this phenomenon well towards offering a better fit.

- In insurance we are interested in total claims paid, whose distribution results from compounding the fitted frequency distribution with a severity distribution. These three distributions have properties that make it easy to work with the resulting aggregate severity distribution.

Binomial Distribution

We begin with the binomial distribution which arises from any finite sequence of identical and independent experiments with binary outcomesOutcomes whose unit can take on only two possible states, traditionally labeled as 0 and 1. The most canonical of such experiments is the (biased or unbiased) coin tossing experiment with the outcome being heads or tails. So if \(N\) denotes the number of heads in a sequence of \(m\) independent coin tossing experiments with an identical coin which turns heads up with probability \(q\), then the distribution of \(N\) is called the binomial distributionA random variable has a binomial distribution (with parameters m and q) if it is the number of “successes” in a fixed number m of independent random trials, all of which have the same probability q of resulting in “success.” with parameters \((m,q)\), with \(m\) a positive integer and \(q\in[0,1]\). Note that when \(q=0\) (resp., \(q=1\)) then the distribution is degenerate with \(N=0\) (resp., \(N=m\)) with probability \(1\). Clearly, its support when \(q\in(0,1)\) equals \(\{0,1,\ldots,m\}\) with pmf given by 4

\[ p_k= {m \choose k} q^k (1-q)^{m-k}, \quad k=0,\ldots,m. \] where \[{m \choose k} = \frac{m!}{k!(m-k)!}\]

The reason for its name is that the pmf takes values among the terms that arise from the binomial expansion of \((q +(1-q))^m\). This realization then leads to the the following expression for the pgf of the binomial distribution: \[ \begin{array}{ll} P_N(z) &= \sum_{k=0}^m z^k {m \choose k} q^k (1-q)^{m-k} \\ &= \sum_{k=0}^m {m \choose k} (zq)^k (1-q)^{m-k} \\ &= (qz+(1-q))^m = (1+q(z-1))^m. \end{array} \] Note that the above expression for the pgf confirms the fact that the binomial distribution is the m-convolutionThe addition of m independent random variables of the Bernoulli distribution, which is the binomial distribution with \(m=1\) and pgf \((1+q(z-1))\). By “m-convolution,” we mean that we can write \(N\) as the sum of \(N_1,\ldots,N_m\). Here, \(N_i\) are iidIndependent and identically distributed Bernoulli variates. Also, note that the mgf of the binomial distribution is given by \((1+q(e^t-1))^m\).

The mean and variance of the binomial distribution can be found in a few different ways. To emphasize the key property that it is a \(m\)-convolution of the Bernoulli distribution, we derive below the moments using this property. We begin by observing that the Bernoulli distribution with parameter \(q\) assigns probability of \(q\) and \(1-q\) to \(1\) and \(0\), respectively. So its mean equals \(q\) (\(=0\times (1-q) + 1\times q\)); note that its raw second moment equals its mean as \(N^2=N\) with probability \(1\). Using these two facts we see that the variance equals \(q(1-q)\). Moving on to the binomial distribution with parameters \(m\) and \(q\), using the fact that it is the \(m\)-convolution of the Bernoulli distribution, we write \(N\) as the sum of \(N_1,\ldots,N_m\), where \(N_i\) are iid Bernoulli variates, as above. Now using the moments of Bernoulli and linearity of the expectation, we see that

\[

\mathrm{E}[{N}]=\mathrm{E}\left[{\sum_{i=1}^m N_i}\right] = \sum_{i=1}^m ~\mathrm{E}[N_i] = mq.

\]

Also, using the fact that the variance of the sum of independent random variables is the sum of their variances, we see that

\[

\mathrm{Var}[{N}]=\mathrm{Var}~\left[{\sum_{i=1}^m N_i}\right]=\sum_{i=1}^m \mathrm{Var}[{N_i}] = mq(1-q).

\]

Alternate derivations of the above moments are suggested in the exercises. One important observation, especially from the point of view of applications, is that the mean is greater than the variance unless \(q=0\).

Poisson Distribution

After the binomial distribution, the Poisson distributionA discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time or space if these events occur with a known constant rate and independently of the time since the last event (named after the French polymath Simeon Denis Poisson) is probably the most well known of discrete distributions. This is partly due to the fact that it arises naturally as the distribution of the count of the random occurrences of a type of event in a certain time period, if the rate of occurrences of such events is a constant. It also arises as the asymptotic limit of the binomial distribution with \(m\rightarrow \infty\) and \(mq\rightarrow \lambda\).

The Poisson distribution is parametrized by a single parameter usually denoted by \(\lambda\) which takes values in \((0,\infty)\). Its pmf is given by \[ p_k = \frac{e^{-\lambda}\lambda^k}{k!}, k=0,1,\ldots \] It is easy to check that the above specifies a pmf as the terms are clearly non-negative, and that they sum to one follows from the infinite Taylor series expansion of \(e^\lambda\). More generally, we can derive its pgf, \(P_N(\cdot)\), as follows: \[ P_N(z)= \sum_{k=0}^\infty p_k z^k = \sum_{k=0}^\infty \frac{e^{-\lambda}\lambda^kz^k}{k!} = e^{-\lambda} e^{\lambda z} = e^{\lambda(z-1)}, \forall z\in\mathbb{R}. \] From the above, we derive its mgf as follows: \[ M_N(t)=P_N(e^t)=e^{\lambda(e^t-1)}, t\in \mathbb{R}. \] Towards deriving its mean, we note that for the Poisson distribution \[ kp_k=\begin{cases} 0, &k=0 \cr \lambda~p_{k-1}, &k\geq1 . \end{cases} \] This can be checked easily. In particular, this implies that \[ \mathrm{E}[{N}]=\sum_{k\geq 0} k~p_k =\lambda\sum_{k\geq 1} p_{k-1} = \lambda\sum_{j\geq 0} p_{j} =\lambda. \] In fact, more generally, using either a generalization of the above or using Theorem 3.1, we see that \[ \mathrm{E}{\prod\limits_{i=0}^{m-1} (N-i)}=\left.\frac{{\rm d}^m}{{\rm d}s^m} P_N(s)\right\vert_{s=1}=\lambda^m, \quad m\geq 1. \] This, in particular, implies that \[ \mathrm{Var}[{N}]=\mathrm{E}[{N^2}]-[\mathrm{E}({N})]^2 = \mathrm{E}~[N(N-1)]+\mathrm{E}[N]-(\mathrm{E}[{N]})^2=\lambda^2+\lambda-\lambda^2=\lambda. \] Note that interestingly for the Poisson distribution \(\mathrm{Var}[N]=\mathrm{E}[N]\).

Negative Binomial Distribution

The third important count distribution is the negative binomial distributionThe number of successes until we observe the rth failure in independent repetitions of an experiment with binary outcomes. Recall that the binomial distribution arose as the distribution of the number of successes in \(m\) independent repetitions of an experiment with binary outcomes. If we instead consider the number of successes until we observe the \(r\)-th failure in independent repetitions of an experiment with binary outcomes, then its distribution is a negative binomial distribution. A particular case, when \(r=1\), is the geometric distribution. However when \(r\) in not an integer, the above random experiment would not be applicable. In the following, we allow the parameter \(r\) to be any positive real number to then motivate the distribution more generally. To explain its name, we recall the binomial series, i.e. \[ (1+x)^s= 1 + s x + \frac{s(s-1)}{2!}x^2 + \ldots..., \quad s\in\mathbb{R}; \vert x \vert<1. \] If we define \({s \choose k}\), the generalized binomial coefficient, by \[ {s \choose k}=\frac{s(s-1)\cdots(s-k+1)}{k!}, \] then we have \[ (1+x)^s= \sum_{k=0}^{\infty} {s \choose k} x^k, \quad s\in\mathbb{R}; \vert x \vert<1. \] If we let \(s=-r\), then we see that the above yields \[ (1-x)^{-r}= 1 + r x + \frac{(r+1)r}{2!}x^2 + \ldots...= \sum_{k=0}^\infty {r+k-1 \choose k} x^k, \quad r\in\mathbb{R}; \vert x \vert<1. \] This implies that if we define \(p_k\) as \[ p_k = {r+k-1 \choose k} \left(\frac{1}{1+\beta}\right)^r \left(\frac{\beta}{1+\beta}\right)^k, \quad k=0,1,\ldots \] for \(r>0\) and \(\beta\geq0\), then it defines a valid pmf. Such defined distribution is called the negative binomial distribution with parameters \((r,\beta)\) with \(r>0\) and \(\beta\geq 0\). Moreover, the binomial series also implies that the pgf of this distribution is given by \[ \begin{aligned} P_N(z) &= (1-\beta(z-1))^{-r}, \quad \vert z \vert < 1+\frac{1}{\beta}, \beta\geq0. \end{aligned} \] The above implies that the mgf is given by \[ \begin{aligned} M_N(t) &= (1-\beta(e^t-1))^{-r}, \quad t < \log\left(1+\frac{1}{\beta}\right), \beta\geq0. \end{aligned} \] We derive its moments using Theorem 3.1 as follows: \[\begin{eqnarray*} \mathrm{E}[N]&=&M'(0)= \left. r\beta e^t (1-\beta(e^t-1))^{-r-1}\right\vert_{t=0}=r\beta;\\ \mathrm{E}[N^2]&=&M''(0)= \left.\left[ r\beta e^t (1-\beta(e^t-1))^{-r-1} + r(r+1)\beta^2 e^{2t} (1-\beta(e^t-1))^{-r-2}\right]\right\vert_{t=0}\\ &=&r\beta(1+\beta)+r^2\beta^2;\\ \hbox{and }\mathrm{Var}[N]&=&\mathrm{E}{[N^2]}-(\mathrm{E}[{N}])^2=r\beta(1+\beta)+r^2\beta^2-r^2\beta^2=r\beta(1+\beta) \end{eqnarray*}\] We note that when \(\beta>0\), we have \(\mathrm{Var}[N] >\mathrm{E}[N]\). In other words, this distribution is overdispersedThe presence of greater variability (statistical dispersion) in a data set than would be expected based on a given statistical model (relative to the Poisson); similarly, when \(q>0\) the binomial distribution is said to be underdispersedThere was less variation in the data than predicted (relative to the Poisson).

Finally, we observe that the Poisson distribution also emerges as a limit of negative binomial distributions. Towards establishing this, let \(\beta_r\) be such that as \(r\) approaches infinity \(r\beta_r\) approaches \(\lambda>0\). Then we see that the mgfs of negative binomial distributions with parameters \((r,\beta_r)\) satisfies \[ \lim_{r\rightarrow 0} (1-\beta_r(e^t-1))^{-r}=\exp\{\lambda(e^t-1)\}, \] with the right hand side of the above equation being the mgf of the Poisson distribution with parameter \(\lambda\).5

Show Quiz Solution

3.3 The (a, b, 0) Class

In this section, you learn how to:

- Define the (a,b,0) class of frequency distributions

- Discuss the importance of the recursive relationship underpinning this class of distributions

- Identify conditions under which this general class reduces to each of the binomial, Poisson, and negative binomial distributions

In the previous section we studied three distributions, namely the binomial, the Poisson and the negative binomial distributions. In the case of the Poisson, to derive its mean we used the the fact that \[ kp_k=\lambda p_{k-1}, \quad k\geq 1, \] which can be expressed equivalently as \[ \frac{p_k}{p_{k-1}}=\frac{\lambda}{k}, \quad k\geq 1. \] Interestingly, we can similarly show that for the binomial distribution \[ \frac{p_k}{p_{k-1}}=\frac{-q}{1-q}+\left(\frac{(m+1)q}{1-q}\right)\frac{1}{k}, \quad k=1,\ldots,m, \] and that for the negative binomial distribution \[ \frac{p_k}{p_{k-1}}=\frac{\beta}{1+\beta}+\left(\frac{(r-1)\beta}{1+\beta}\right)\frac{1}{k}, \quad k\geq 1. \] The above relationships are all of the form \[\begin{equation} \frac{p_k}{p_{k-1}}=a+\frac{b}{k}, \quad k\geq 1; \tag{3.1} \end{equation}\] this raises the question if there are any other distributions which satisfy this seemingly general recurrence relation. Note that the ratio on the left, the ratio of two probabilities, is non-negative.

Show A Snippet of Theory

From the above development we see that not only does the recurrence (3.1) tie these three distributions together, but also it characterizes them. For this reason these three distributions are collectively referred to in the actuarial literature as (a,b,0) class of distributions, with \(0\) referring to the starting point of the recurrence. Note that the value of \(p_0\) is implied by \((a,b)\) since the probabilities have to sum to one. Of course, (3.1) as a recurrence relation for \(p_k\) makes the computation of the pmf efficient by removing redundancies. Later, we will see that it does so even in the case of compound distributions with the frequency distribution belonging to the \((a,b,0)\) class - this fact is the more important motivating reason to study these three distributions from this viewpoint.

Example 3.3.1. A discrete probability distribution has the following properties \[ \begin{aligned} p_k&=c\left( 1+\frac{2}{k}\right) p_{k-1} \:\:\: k=1,2,3,\ldots\\ p_1&= \frac{9}{256} \end{aligned} \] Determine the expected value of this discrete random variable.

Show Example Solution

Show Quiz Solution

3.4 Estimating Frequency Distributions

In this section, you learn how to:

- Define a likelihood for a sample of observations from a discrete distribution

- Define the maximum likelihood estimator for a random sample of observations from a discrete distribution

- Calculate the maximum likelihood estimator for the binomial, Poisson, and negative binomial distributions

3.4.1 Parameter Estimation

In Section 3.2 we introduced three distributions of importance in modeling various types of count data arising from insurance. Let us now suppose that we have a set of count data to which we wish to fit a distribution, and that we have determined that one of these \((a,b,0)\) distributions is more appropriate than the others. Since each one of these forms a class of distributions if we allow its parameter(s) to take any permissible value, there remains the task of determining the best value of the parameter(s) for the data at hand. This is a statistical point estimation problem, and in parametric inference problems the statistical inference paradigm of maximum likelihood usually yields efficient estimators. In this section we describe this paradigm and derive the maximum likelihood estimators.

Let us suppose that we observe the independent and identically distributed, iid, random variables \(X_1,X_2,\ldots,X_n\) from a distribution with pmf \(p_\theta\), where \(\theta\) is a vector of parameters and an unknown value in the parameter space \(\Theta\subseteq \mathbb{R}^d\). For example, in the case of the Poisson distribution, there is a single parameter so that \(d=1\) and

\[

p_\theta(x)=e^{-\theta}\frac{\theta^x}{x!}, \quad x=0,1,\ldots,

\]

with \(\theta > 0\). In the case of the binomial distribution we have

\[

p_\theta(x)= {m \choose x} q^x(1-q)^{m-x}, \quad x=0,1,\ldots,m.

\]

For some applications, we can view \(m\) as a parameter and so take \(d=2\) so that \(\theta=(m,q)\in \{0,1,2,\ldots\}\times[0,1]\).

Let us suppose that the observations are \(x_1,\ldots,x_n\), observed values of the random sample \(X_1,X_2,\ldots,X_n\) presented earlier. In this case, the probability of observing this sample from \(p_\theta\) equals \[ \prod_{i=1}^n p_\theta(x_i). \] The above, denoted by \(L(\theta)\), viewed as a function of \(\theta\), is called the likelihood. Note that we suppressed its dependence on the data, to emphasize that we are viewing it as a function of the parameter vector. For example, in the case of the Poisson distribution we have \[ L(\lambda)=e^{-n\lambda} \lambda^{\sum_{i=1}^n x_i} \left(\prod_{i=1}^n x_i!\right)^{-1}. \] In the case of the binomial distribution we have \[ L(m,q)=\left(\prod_{i=1}^n {m \choose x_i}\right) q^{\sum_{i=1}^n x_i} (1-q)^{nm-\sum_{i=1}^n x_i} . \] The maximum likelihood estimator (mle)The possible value of the parameter for which the chance of observing the data largest for \(\theta\) is any maximizer of the likelihood; in a sense the mle chooses the set of parameter values that best explains the observed observations. Appendix Section 17.2.2 reviews the foundations of maximum likelihood estimation with more mathematical details in Appendix Chapter 19.

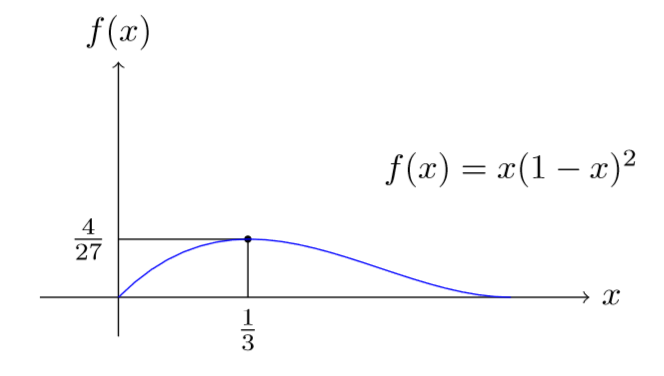

Special Case: Three Bernoulli Outcomes. To illustrate, consider a sample of size \(n=3\) from a Bernoulli distribution (binomial with \(m=1\)) with values \(0,1,0\). The likelihood in this case is easily checked to equal \[ L(q)=q(1-q)^2, \] and the plot of the likelihood is given in Figure 3.1. As shown in the plot, the maximum value of the likelihood equals \(4/27\) and is attained at \(q=1/3\), and hence the maximum likelihood estimate for \(q\) is \(1/3\) for the given sample. In this case one can resort to algebra to show that \[ q(1-q)^2=\left(q-\frac{1}{3}\right)^2\left(q-\frac{4}{3}\right)+\frac{4}{27}, \] and conclude that the maximum equals \(4/27\), and is attained at \(q=1/3\) (using the fact that the first term is non-positive in the interval \([0,1]\)).

But as is apparent, this way of deriving the mle using algebra does not generalize. In general, one resorts to calculus to derive the mle - note that for some likelihoods one may have to resort to other optimization methods, especially when the likelihood has many local extremaThe largest and smallest value of the function within a given range. It is customary to equivalently maximize the logarithm of the likelihood6 \(L(\cdot)\), denoted by \(l(\cdot)\), and look at the set of zeros of its first derivative7 \(l'(\cdot)\). In the case of the above likelihood, \(l(q)=\log(q)+2\log(1-q)\), and \[ l'(q)=\frac{\rm d}{{\rm d}q}l(q)=\frac{1}{q}-\frac{2}{1-q}. \] The unique zero of \(l'(\cdot)\) equals \(1/3\), and since \(l''(\cdot)\) is negative, we have \(1/3\) is the unique maximizer of the likelihood and hence its maximum likelihood estimate.

Figure 3.1: Likelihood of a \((0,1,0)\) \(3\)-sample from Bernoulli

3.4.2 Frequency Distributions MLE

In the following, we derive the maximum likelihood estimator, mle, for the three members of the \((a,b,0)\) class. We begin by summarizing the discussion above. In the setting of observing iid, independent and identically distributed, random variables \(X_1,X_2,\ldots,X_n\) from a distribution with pmf \(p_\theta\), where \(\theta\) takes an unknown value in \(\Theta\subseteq \mathbb{R}^d\), the likelihood \(L(\cdot)\), a function on \(\Theta\) is defined as \[ L(\theta)=\prod_{i=1}^n p_\theta(x_i), \] where \(x_1,\ldots,x_n\) are the observed values. The mle of \(\theta\), denoted as \(\hat{\theta}_{\rm MLE}\), is a function which maps the observations to an element of the set of maximizers of \(L(\cdot)\), namely \[ \{\theta \vert L(\theta)=\max_{\eta\in\Theta}L(\eta)\}. \] Note the above set is a function of the observations, even though this dependence is not made explicit. In the case of the three distributions that we study, and quite generally, the above set is a singleton with probability tending to one (with increasing sample size). In other words, for many commonly used distributions and when the sample size is large, the likelihood estimate is uniquely defined with high probability.

In the following, we assume that we have observed \(n\) iid random variables \(X_1,X_2,\ldots,X_n\) from the distribution under consideration, even though the parametric value is unknown. Also, \(x_1,x_2,\ldots,x_n\) will denote the observed values. We note that in the case of count data, and data from discrete distributions in general, the likelihood can alternately be represented as \[ L(\theta)=\prod_{k\geq 0} \left(p_\theta(k)\right)^{m_k}, \] where \(m_k\) is the number of observations equal to \(k\). Mathematically, we have \[ m_k= \left\vert \{i\vert x_i=k, 1\leq i \leq n\} \right\vert=\sum_{i= 1}^n I(x_i=k), \quad k\geq 0. \] Note that this transformation retains all of the data, compiling it in a streamlined manner. For large \(n\) it leads to compression of the data in the sense of sufficiency. Below, we present expressions for the mle in terms of \(\{m_k\}_{k\geq 1}\) as well.

Special Case: Poisson Distribution. In this case, as noted above, the likelihood is given by

\[

L(\lambda)=\left(\prod_{i=1}^n x_i!\right)^{-1}e^{-n\lambda}\lambda^{\sum_{i=1}^n x_i} .

\]

Taking logarithms, the log-likelihood is

\[

l(\lambda)= -\sum_{i=1}^n \log(x_i!) -n\lambda +\log(\lambda) \cdot \sum_{i=1}^n x_i .

\]

Taking a derivative, we have

\[

l'(\lambda)= -n +\frac{1}{\lambda}\sum_{i=1}^n x_i.

\]

In evaluating \(l''(\lambda)\), when \(\sum_{i=1}^n x_i>0\), \(l''< 0\). Consequently, the maximum is attained at the sample mean, \(\overline{x}\), presented below. When \(\sum_{i=1}^n x_i=0\), the likelihood is a decreasing function and hence the maximum is attained at the least possible parameter value; this results in the maximum likelihood estimate being zero. Hence, we have

\[

\overline{x} = \hat{\lambda}_{\rm MLE} = \frac{1}{n}\sum_{i=1}^n x_i.

\]

Note that the sample mean can be computed also as

\[

\overline{x} = \frac{1}{n} \sum_{k\geq 1} k \cdot m_k ~.

\]

It is noteworthy that in the case of the Poisson, the exact distribution of \(\hat{\lambda}_{\rm MLE}\) is available in closed form - it is a scaled Poisson - when the underlying distribution is a Poisson. This is so as the sum of independent Poisson random variables is a Poisson as well. Of course, for large sample size one can use the ordinary Central Limit Theorem (CLT)In some situations, when independent random variables are added, their properly normalized sum tends toward a normal distribution even if the original variables themselves are not normally distributed. to derive a normal approximation. Note that the latter approximation holds even if the underlying distribution is any distribution with a finite second moment.

Special Case: Binomial Distribution with known \(m\). Unlike the case of the Poisson distribution, the parameter space in the case of the binomial is \(2\)-dimensional. Hence the optimization problem is a bit more challenging. We first discuss the case where \(m\) is taken to be known - this is not a realistic assumption in insurance applications but is appropriate in circumstances where we are observing \(m\) iid binary outcomes with unknown probabilities.

We begin by observing that the likelihood is given by \[ L(m,q)= \left(\prod_{i=1}^n {m \choose x_i}\right) q^{\sum_{i=1}^n x_i} (1-q)^{nm-\sum_{i=1}^n x_i} . \] Taking logarithms, the log-likelihood is \[ \begin{array}{ll} l(m,q) &= \sum_{i=1}^n \log\left({m \choose x_i}\right) + \left({\sum_{i=1}^n x_i}\right)\log(q) \\ & \ \ \ + \left({nm-\sum_{i=1}^n x_i}\right)\log(1-q) \\ &= \sum_{i=1}^n \log\left({m \choose x_i}\right) + n \overline{x}\log(q) + n\left({m- \overline{x}}\right)\log(1-q) , \end{array} \] where \(\overline{x} = n^{-1} \sum_{i=1}^n x_i\). Since we have assumed that \(m\) is known, maximizing \(l(m,q)\) with respect to \(q\) involves taking the first differential and equating to zero: \[ \frac{{\rm d}l(m,q)}{{\rm d}q}=\frac{n\overline{x}}{q}-\frac{n\left({m- \overline{x}}\right)}{1-q}=0, \] which implies that \[ \hat{q}_{MLE}=\frac{\overline{x}}{m}. \]

Special Case: Binomial Distribution with unknown \(m\). Note that since \(m\) takes only non-negative integer values, we cannot use multivariate calculus to find the optimal values. Nevertheless, we can use single variable calculus to show that \[\begin{equation} \hat{q}_{MLE}\times \hat{m}_{MLE} = \overline{x}. \tag{3.2} \end{equation}\]

Show A Verification of Equation (3.2)

With equation (3.2), the above reduces the task to the search for \(\hat{m}_{\rm MLE}\), which is a maximizer of \[\begin{equation} L\left(m,\frac{\overline{x}}{m} \right). \tag{3.3} \end{equation}\] Note the likelihood would be zero for values of \(m\) smaller than \(\max\limits_{1\leq i \leq n}x_i\), and hence \(\hat{m}_{\rm MLE}\geq \max_{1\leq i \leq n}x_i\).

Technical Note on the Poisson Approximation to the Binomial

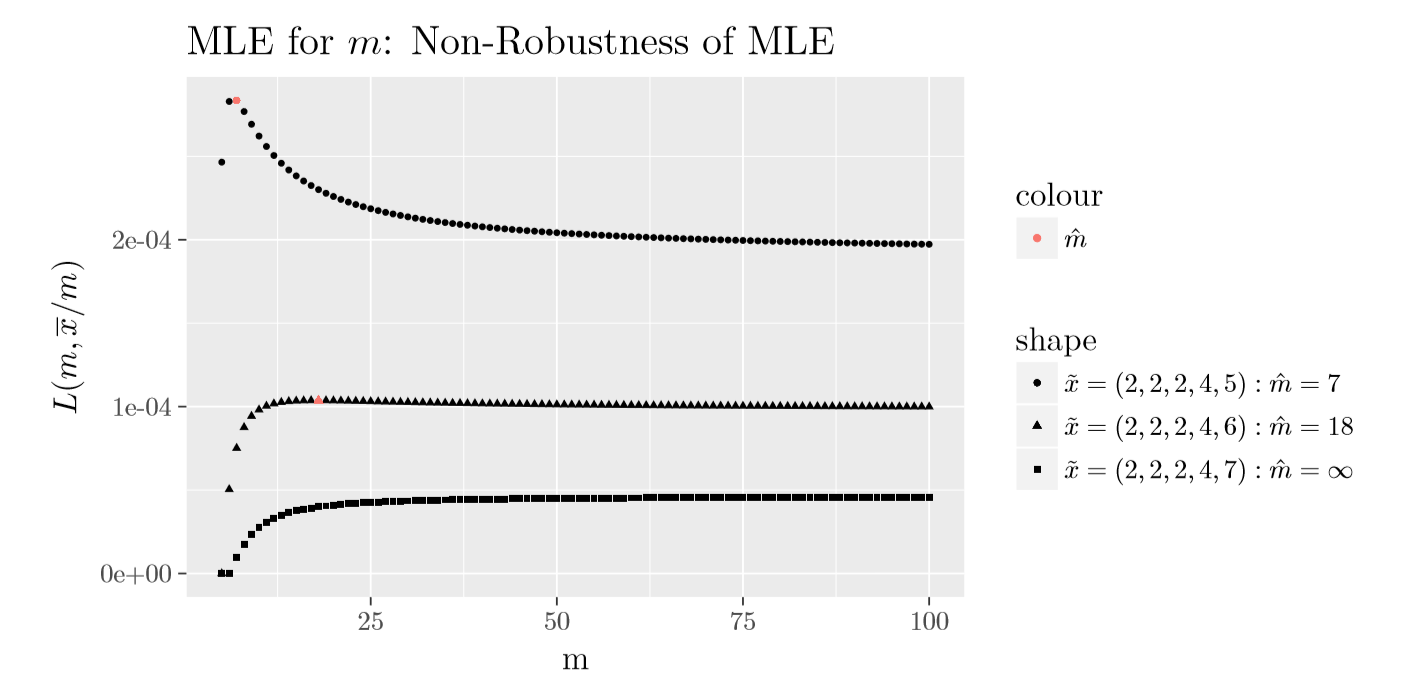

In Figure 3.2 below we display the plot of \(L\left(m,\overline{x}/m\right)\) for three different samples of size \(5\); they differ only in the value of the sample maximum. The first sample of \((2,2,2,4,5)\) has the ratio of sample mean to sample variance greater than \(1\) (\(1.875\)), the second sample of \((2,2,2,4,6)\) has the ratio equal to \(1.25\) which is closer to \(1\), and the third sample of \((2,2,2,4,7)\) has the ratio less than \(1\) (\(0.885\)). For these three samples, as shown in Figure 3.2, \(\hat{m}_{\rm MLE}\) equals \(7\), \(18\) and \(\infty\), respectively. Note that the limiting value of \(L\left(m,\overline{x}/m\right)\) as \(m\) approaches infinity equals \[\begin{equation} \left(\prod_{i=1}^n x_i! \right)^{-1} \exp\left(-n \overline{x}~\right) \left(~\overline{x}~\right)^{n\overline{x}}. \tag{3.4} \end{equation}\] Also, note that Figure 3.2 shows that the mle of \(m\) is non-robustResistant to errors in the results, produced by deviations from assumptions, i.e. changes in a small proportion of the data set can cause large changes in the estimator.

The above discussion suggests the following simple algorithm:

- Step 1. If the sample mean is less than or equal to the sample variance, then set \(\hat{m}_{MLE}=\infty\). The mle suggested distribution is a Poisson distribution with \(\hat{\lambda}=\overline{x}\).

- Step 2. If the sample mean is greater than the sample variance, then compute \(L(m,\overline{x}/m)\) for \(m\) values greater than or equal to the sample maximum until \(L(m,\overline{x}/m)\) is close to the value of the Poisson likelihood given in (3.4). The value of \(m\) that corresponds to the maximum value of \(L(m,\overline{x}/m)\) among those computed equals \(\hat{m}_{MLE}\).

We note that if the underlying distribution is the binomial distribution with parameters \((m,q)\) (with \(q>0\)) then \(\hat{m}_{MLE}\) equals \(m\) for large sample sizes. Also, \(\hat{q}_{MLE}\) will have an asymptotically normal distribution and converge with probability one to \(q\).

Figure 3.2: Plot of \(L(m,\bar{x}/m)\) for a Binomial Distribution

Special Case: Negative Binomial Distribution. The case of the negative binomial distribution is similar to that of the binomial distribution in the sense that we have two parameters and the mles are not available in closed form. A difference between them is that unlike the binomial parameter \(m\) which takes positive integer values, the parameter \(r\) of the negative binomial can assume any positive real value. This makes the optimization problem a tad more complex. We begin by observing that the likelihood can be expressed in the following form:

\[

L(r,\beta)=\left(\prod_{i=1}^n {r+x_i-1 \choose x_i}{r+x_i-1 \choose x_i}\right) (1+\beta)^{-n(r+\overline{x})} \beta^{n\overline{x}}.

\]

The above implies that log-likelihood is given by

\[

l(r,\beta)=\sum_{i=1}^n \log{r+x_i-1 \choose x_i} -n(r+\overline{x}) \log(1+\beta) +n\overline{x}\log\beta,

\]

and hence

\[

\frac{\delta}{\delta\beta} l(r,\beta) = -\frac{n(r+\overline{x})}{1+\beta} + \frac{n\overline{x}}{\beta}.

\]

Equating the above to zero, we get

\[

\hat{r}_{MLE}\times \hat{\beta}_{MLE} = \overline{x}.

\]

The above reduces the two dimensional optimization problem to a one-dimensional problem - we need to maximize

\[

l(r,\overline{x}/r)=\sum_{i=1}^n \log{r+x_i-1 \choose x_i} -n(r+\overline{x}) \log(1+\overline{x}/r) +n\overline{x}\log(\overline{x}/r),

\]

with respect to \(r\), with the maximizing \(r\) being its mle and \(\hat{\beta}_{MLE}=\overline{x}/\hat{r}_{MLE}\). In Levin, Reeds, et al. (1977) it is shown that if the sample variance is greater than the sample mean then there exists a unique \(r>0\) that maximizes \(l(r,\overline{x}/r)\) and hence a unique

mle for \(r\) and \(\beta\). Also, they show that if \(\hat{\sigma}^2\leq \overline{x}\), then the negative binomial likelihood will be dominated by the Poisson likelihood with \(\hat{\lambda}=\overline{x}\). In other words, a Poisson distribution offers a better fit to the data. The guarantee in the case of \(\hat{\sigma}^2>\hat{\mu}\) permits us to use some algorithm to maximize \(l(r,\overline{x}/r)\). Towards an alternate method of computing the likelihood, we note that

\[

\begin{array}{ll}

l(r,\overline{x}/r)&=\sum_{i=1}^n \sum_{j=1}^{x_i}\log(r-1+j) - \sum_{i=1}^n\log(x_i!) \\

& \ \ \ - n(r+\overline{x}) \log(r+\overline{x}) + nr\log(r) + n\overline{x}\log(\overline{x}),

\end{array}

\]

which yields

\[

\left(\frac{1}{n}\right)\frac{\delta}{\delta r}l(r,\overline{x}/r)=\frac{1}{n}\sum_{i=1}^n \sum_{j=1}^{x_i}\frac{1}{r-1+j} - \log(r+\overline{x}) + \log(r).

\]

We note that, in the above expressions for the terms involving a double summation, the inner sum equals zero if \(x_i=0\). The maximum likelihood estimate for \(r\) is a root of the last expression and we can use a root finding algorithm to compute it. Also, we have

\[

\left(\frac{1}{n}\right)\frac{\delta^2}{\delta r^2}l(r,\overline{x}/r)=\frac{\overline{x}}{r(r+\overline{x})}-\frac{1}{n}\sum_{i=1}^n \sum_{j=1}^{x_i}\frac{1}{(r-1+j)^2}.

\]

A simple but quickly converging iterative root finding algorithm is the Newton’s methodA root-finding algorithm which produces successively better approximations to the roots of a real-valued function, which incidentally the Babylonians are believed to have used for computing square roots. Under this method, an initial approximation is selected for the root and new approximations for the root are successively generated until convergence. Applying the Newton’s method to our problem results in the following algorithm:

Step i. Choose an approximate solution, say \(r_0\). Set \(k\) to \(0\).

Step ii. Define \(r_{k+1}\) as

\[

r_{k+1}= r_k - \frac{\frac{1}{n}\sum_{i=1}^n \sum_{j=1}^{x_i}\frac{1}{r_k-1+j} - \log(r_k+\overline{x}) + \log(r_k)}{\frac{\overline{x}}{r_k(r_k+\overline{x})}-\frac{1}{n}\sum_{i=1}^n \sum_{j=1}^{x_i}\frac{1}{(r_k-1+j)^2}}

\]

Step iii. If \(r_{k+1}\sim r_k\), then report \(r_{k+1}\) as maximum likelihood estimate; else increment \(k\) by \(1\) and repeat Step ii.

For example, we simulated a \(5\) observation sample of \(41, 49, 40, 27, 23\) from the negative binomial with parameters \(r=10\) and \(\beta=5\). Choosing the starting value of \(r\) such that \[ r\beta=\hat{\mu} \quad \hbox{and} \quad r\beta(1+\beta)=\hat{\sigma}^2 \] where \(\hat{\mu}\) represents the estimated mean and \(\hat{\sigma}^2\) is the estimated variance. This leads to the starting value for \(r\) of \(23.14286\). The iterates of \(r\) from the Newton’s method are \[ 21.39627, 21.60287, 21.60647, 21.60647; \] the rapid convergence seen above is typical of the Newton’s method. Hence in this example, \(\hat{r}_{MLE} \sim 21.60647\) and \(\hat{\beta}_{MLE} = 1.66616\).

Show R Implementation of Newtons Method - Negative Binomial MLE for r

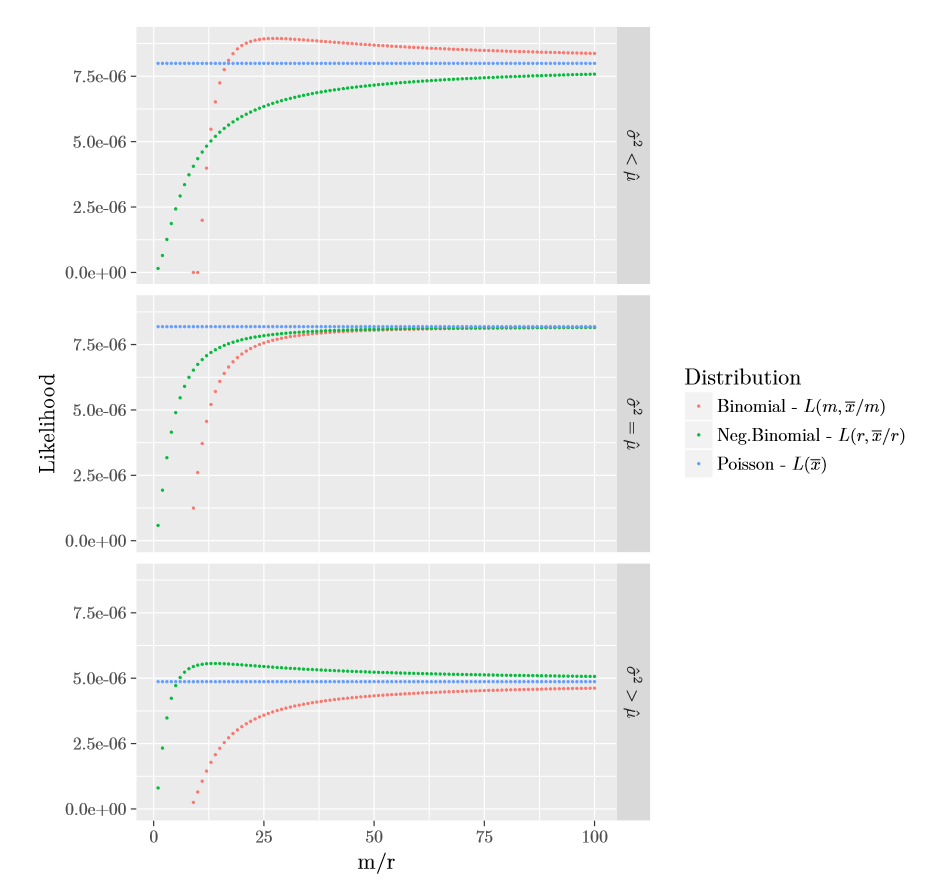

To summarize our discussion of MLEMaximum likelihood estimate for the \((a,b,0)\) class of distributions, in Figure 3.3 below we plot the maximum value of the Poisson likelihood, \(L(m,\overline{x}/m)\) for the binomial, and \(L(r,\overline{x}/r)\) for the negative binomial, for the three samples of size \(5\) given in Table 3.1. The data was constructed to cover the three orderings of the sample mean and variance. As shown in the Figure 3.3, and supported by theory, if \(\hat{\mu}<\hat{\sigma}^2\) then the negative binomial results in a higher maximum likelihood value; if \(\hat{\mu}=\hat{\sigma}^2\) the Poisson has the highest likelihood value; and finally in the case that \(\hat{\mu}>\hat{\sigma}^2\) the binomial gives a better fit than the others. So before fitting a frequency data with an \((a,b,0)\) distribution, it is best to start with examining the ordering of \(\hat{\mu}\) and \(\hat{\sigma}^2\). We again emphasize that the Poisson is on the boundary of the negative binomial and binomial distributions. So in the case that \(\hat{\mu}\geq\hat{\sigma}^2\) (\(\hat{\mu}\leq\hat{\sigma}^2\), resp.) the Poisson yields a better fit than the negative binomial (binomial, resp.), which is indicated by \(\hat{r}=\infty\) (\(\hat{m}=\infty\), respectively).

Table 3.1. Three Samples of Size 5

\[ \small{ \begin{array}{c|c|c} \hline \text{Data} & \text{Mean }(\hat{\mu}) & \text{Variance }(\hat{\sigma}^2) \\ \hline (2,3,6,8,9) & 5.60 & 7.44 \\ (2,5,6,8,9) & 6 & 6\\ (4,7,8,10,11) & 8 & 6\\\hline \end{array} } \]

Figure 3.3: Plot of \((a,b,0)\) Partially Maximized Likelihoods

Show Quiz Solution

3.5 Other Frequency Distributions

In this section, you learn how to:

- Define the (a,b,1) class of frequency distributions and discuss the importance of the recursive relationship underpinning this class of distributions

- Interpret zero truncated and modified versions of the binomial, Poisson, and negative binomial distributions

- Compute probabilities using the recursive relationship

In the previous sections, we discussed three distributions with supports contained in the set of non-negative integers, which well cater to many insurance applications. Moreover, typically by allowing the parameters to be a function of known (to the insurer) explanatory variablesIn regression, the explanatory variable is the one that is supposed to “explain” the other. such as age, sex, geographic location (territory), and so forth, these distributions allow us to explain claim probabilities in terms of these variables. The field of statistical study that studies such models is known as regression analysisA set of statistical processes for estimating the relationships among variables - it is an important topic of actuarial interest that we will not pursue in this book; see Frees (2009).

There are clearly infinitely many other count distributions, and more importantly the above distributions by themselves do not cater to all practical needs. In particular, one feature of some insurance data is that the proportion of zero counts can be out of place with the proportion of other counts to be explainable by the above distributions. In the following we modify the above distributions to allow for arbitrary probability for zero count irrespective of the assignment of relative probabilities for the other counts. Another feature of a data set which is naturally comprised of homogeneousUnits of exposure that face approximately the same expected frequency and severity of loss. subsets is that while the above distributions may provide good fits to each subset, they may fail to do so to the whole data set. Later we naturally extend the \((a,b,0)\) distributions to be able to cater to, in particular, such data sets.

3.5.1 Zero Truncation or Modification

Let us suppose that we are looking at auto insurance policies which appear in a database of auto claims made in a certain period. If one is to study the number of claims that these policies have made during this period, then clearly the distribution has to assign a probability of zero to the count variable assuming the value zero. In other words, by restricting attention to count data from policies in the database of claims, we have in a sense zero-truncated the count data of all policies. In personal lines (like auto), policyholders may not want to report that first claim because of fear that it may increase future insurance rates - this behavior inflates the proportion of zero counts. Examples such as the latter modify the proportion of zero counts. Interestingly, natural modifications of the three distributions considered above are able to provide good fits to zero-modified/truncated data sets arising in insurance.

As presented below, we modify the probability assigned to zero count by the \((a,b,0)\) class while maintaining the relative probabilities assigned to non-zero counts - zero modification. Note that since the \((a,b,0)\) class of distributions satisfies the recurrence (3.1), maintaining relative probabilities of non-zero counts implies that recurrence (3.1) is satisfied for \(k\geq 2\). This leads to the definition of the following class of distributions.

Definition. A count distribution is a member of the \((a, b, 1)\) class if for some constants \(a\) and \(b\) the probabilities \(p_k\) satisfy \[\begin{equation} \frac{p_k}{p_{k-1}}=a+\frac{b}{k},\quad k\geq 2. \tag{3.5} \end{equation}\] Note that since the recursion starts with \(p_1\), and not \(p_0\), we refer to this super-class of \((a,b,0)\) distributions by (a,b,1)A count distribution with probabilities satisfying p_k/p_{k-1}=a+b/k, for some some constants a and b and k>=2. To understand this class, recall that each valid pair of values for \(a\) and \(b\) of the \((a,b,0)\) class corresponds to a unique vector of probabilities \(\{p_k\}_{k\geq 0}\). If we now look at the probability vector \(\{\tilde{p}_k\}_{k\geq 0}\) given by \[ \tilde{p}_k= \frac{1-\tilde{p}_0}{1-p_0}\cdot p_k, \quad k\geq 1, \] where \(\tilde{p}_0\in[0,1)\) is arbitrarily chosen, then since the relative probabilities for positive values according to \(\{p_k\}_{k\geq 0}\) and \(\{\tilde{p}_k\}_{k\geq 0}\) are the same, we have \(\{\tilde{p}_k\}_{k\geq 0}\) satisfies recurrence (3.5). This, in particular, shows that the class of \((a,b,1)\) distributions is strictly wider than that of \((a,b,0)\).

In the above, we started with a pair of values for \(a\) and \(b\) that led to a valid \((a,b,0)\) distribution, and then looked at the \((a,b,1)\) distributions that corresponded to this \((a,b,0)\) distribution. We now argue that the \((a,b,1)\) class allows for a larger set of permissible distributions for \(a\) and \(b\) than the \((a,b,0)\) class. Recall from Section 3.3 that in the case of \(a<0\) we did not use the fact that the recurrence (3.1) started at \(k=1\), and hence the set of pairs \((a,b)\) with \(a<0\) that are permissible for the \((a,b,0)\) class is identical to those that are permissible for the \((a,b,1)\) class. The same conclusion is easily drawn for pairs with \(a=0\). In the case that \(a>0\), instead of the constraint \(a+b>0\) for the \((a,b,0)\) class we now have the weaker constraint of \(a+b/2>0\) for the \((a,b,1)\) class. With the parametrization \(b=(r-1)a\) as used in Section 3.3, instead of \(r>0\) we now have the weaker constraint of \(r>-1\). In particular, we see that while zero modifying a \((a,b,0)\) distribution leads to a distribution in the \((a,b,1)\) class, the conclusion does not hold in the other direction.

Zero modification of a count distribution \(F\) such that it assigns zero probability to zero count is called a zero truncationZero modification of a count distribution such that it assigns zero probability to zero count of \(F\). Hence, the zero truncated version of probabilities \(\{p_k\}_{k\geq 0}\) is given by \[ \tilde{p}_k=\begin{cases} 0, & k=0;\\ \frac{p_k}{1-p_0}, & k\geq 1. \end{cases} \]

In particular, we have that a zero modification of a count distribution \(\{p_k^T\}_{k\geq 0}\), denoted by \(\{p^M_k\}_{k\geq 0}\), can be written as a convex combinationA linear combination of points where all coefficients are non-negative and sum to 1 of the degenerate distributionA deterministic distribution and takes only a single value at \(0\) and the zero truncation of \(\{p_k\}_{k\geq 0}\), denoted by \(\{p^T_k\}_{k\geq 0}\). That is we have \[ p^M_k= p^M_0 \cdot \delta_{0}(k) + (1-p^M_0) \cdot p^T_k, \quad k\geq 0. \]

Example 3.5.1. Zero Truncated/Modified Poisson. Consider a Poisson distribution with parameter \(\lambda=2\). Calculate \(p_k, k=0,1,2,3\), for the usual (unmodified), truncated and a modified version with \((p_0^M=0.6)\).

Show Example Solution

Show Quiz Solution

3.6 Mixture Distributions

In this section, you learn how to:

- Define a mixture distribution when the mixing component is based on a finite number of sub-groups

- Compute mixture distribution probabilities from mixing proportions and knowledge of the distribution of each subgroup

- Define a mixture distribution when the mixing component is continuous

In many applications, the underlying population consists of naturally defined sub-groups with some homogeneity within each sub-group. In such cases it is convenient to model the individual sub-groups, and in a ground-up manner model the whole population. As we shall see below, beyond the aesthetic appeal of the approach, it also extends the range of applications that can be catered to by standard parametric distributions.

Let \(k\) denote the number of defined sub-groups in a population, and let \(F_i\) denote the distribution of an observation drawn from the \(i\)-th subgroup. If we let \(\alpha_i\) denote the proportion of the population in the \(i\)-th subgroup, with \(\sum_{i=1}^k \alpha_i=1\), then the distribution of a randomly chosen observation from the population, denoted by \(F\), is given by \[\begin{equation} F(x)=\sum_{i=1}^k \alpha_i \cdot F_i(x). \tag{3.6} \end{equation}\] The above expression can be seen as a direct application of the Law of Total Probability. As an example, consider a population of drivers split broadly into two sub-groups, those with at most five years of driving experience and those with more than five years experience. Let \(\alpha\) denote the proportion of drivers with less than \(5\) years experience, and \(F_{\leq 5}\) and \(F_{> 5}\) denote the distribution of the count of claims in a year for a driver in each group, respectively. Then the distribution of claim count of a randomly selected driver is given by \[ \alpha\cdot F_{\leq 5}(x) + (1-\alpha)F_{> 5}(x). \]

An alternate definition of a mixture distributionThe probability distribution of a random variable that is derived from a collection of other random variables as follows: first, a random variable is selected by chance from the collection according to given probabilities of selection, and then the value of the selected random variable is realized is as follows. Let \(N_i\) be a random variable with distribution \(F_i\), \(i=1,\ldots, k\). Let \(I\) be a random variable taking values \(1,2,\ldots,k\) with probabilities \(\alpha_1,\ldots,\alpha_k\), respectively. Then the random variable \(N_I\) has a distribution given by equation (3.6)8.

In (3.6) we see that the distribution function is a convex combination of the component distribution functions. This result easily extends to the probability mass function, the survival function, the raw moments, and the expectation as these are all linear mappings of the distribution function. We note that this is not true for central moments like the variance, and conditional measures like the hazard rate function. In the case of variance it is easily seen as \[\begin{equation} \mathrm{Var}{[N_I]}=\mathrm{E}[{\mathrm{Var}[{N_I\vert I}]]} + \mathrm{Var}[{\mathrm{E}[{N_I|I}}]]=\sum_{i=1}^k \alpha_i \mathrm{Var}[{N_i}] + \mathrm{Var}[{\mathrm{E}[{N_I|I}}]] . \tag{3.7} \end{equation}\] Appendix Chapter 18 provides additional background about this important expression.

Example 3.6.1. Actuarial Exam Question. In a certain town the number of common colds an individual will get in a year follows a Poisson distribution that depends on the individual’s age and smoking status. The distribution of the population and the mean number of colds are as follows:

Table 3.2. The Distribution of the Population and the Mean Number of Colds

\[ \small{ \begin{array}{l|c|c} \hline & \text{Proportion of population} & \text{Mean number of colds}\\\hline \text{Children} & 0.3 & 3\\ \text{Adult Non-Smokers} & 0.6 & 1\\ \text{Adult Smokers} & 0.1 & 4\\\hline \end{array} } \]

- Calculate the probability that a randomly drawn person has 3 common colds in a year.

- Calculate the conditional probability that a person with exactly 3 common colds in a year is an adult smoker.

Show Example Solution

In the above example, the number of subgroups \(k\) was equal to three. In general, \(k\) can be any natural number, but when \(k\) is large it is parsimonious from a modeling point of view to take the following infinitely many subgroup approach. To motivate this approach, let the \(i\)-th subgroup be such that its component distribution \(F_i\) is given by \(G_{\tilde{\theta_i}}\), where \(G_\cdot\) is a parametric family of distributions with parameter space \(\Theta\subseteq \mathbb{R}^d\). With this assumption, the distribution function \(F\) of a randomly drawn observation from the population is given by

\[

F(x)=\sum_{i=1}^k \alpha_i G_{\tilde{\theta_i}}(x),\quad \forall x\in\mathbb{R} ,

\]

similar to equation (3.6). Alternately, it can be written as

\[

F(x)=\mathrm{E}[{G_{\tilde{\vartheta}}(x)}],\quad \forall x\in\mathbb{R},

\]

where \(\tilde{\vartheta}\) takes values \(\tilde{\theta_i}\) with probability \(\alpha_i\), for \(i=1,\ldots,k\). The above makes it clear that when \(k\) is large, one could model the above by treating \(\tilde{\vartheta}\) as continuous random variable.

To illustrate this approach, suppose we have a population of drivers with the distribution of claims for an individual driver being distributed as a Poisson. Each person has their own (personal) expected number of claims \(\lambda\) - smaller values for good drivers, and larger values for others. There is a distribution of \(\lambda\) in the population; a popular and convenient choice for modeling this distribution is a gamma distribution with parameters \((\alpha, \theta)\) (the gamma distribution will be introduced formally in Section 4.2.1). With these specifications it turns out that the resulting distribution of \(N\), the claims of a randomly chosen driver, is a negative binomial with parameters \((r=\alpha,\beta=\theta)\). This can be shown in many ways, but a straightforward argument is as follows: \[ \begin{array}{ll} \Pr(N=k)&= \int_0^\infty \frac{e^{-\lambda}\lambda^k}{k!} \frac{\lambda^{\alpha-1}e^{-\lambda/\theta}}{\Gamma{(\alpha)}\theta^{\alpha}} d\lambda = \frac{1}{k!\Gamma(\alpha)\theta^\alpha}\int_0^\infty \lambda^{\alpha+k-1}e^{-\lambda(1+1/\theta)}~d\lambda \\ &=\frac{\Gamma{(\alpha+k)}}{k!\Gamma(\alpha)\theta^\alpha(1+1/\theta)^{\alpha+k}} \\ &={\alpha+k-1 \choose k}\left(\frac{1}{1+\theta}\right)^\alpha\left(\frac{\theta}{1+\theta}\right)^k, \quad k=0,1,\ldots \end{array} \] Note that the above derivation implicitly uses the following: \[ f_{N\vert\Lambda=\lambda}(N=k)=\frac{e^{-\lambda}\lambda^k}{k!}, \quad k\geq 0; \quad \hbox{and} \quad f_{\Lambda}(\lambda)= \frac{\lambda^{\alpha-1}e^{-\lambda/\theta}}{\Gamma{(\alpha)}\theta^{\alpha}}, \quad \lambda>0. \]

By considering mixtures of a parametric class of distributions, we increase the richness of the class. This expansion of distributions results in the mixture class being able to cater well to more applications that the parametric class we started with. Mixture modeling is an important modeling technique in insurance applications and later chapters will cover more aspects of this modeling technique.

Example 3.6.2. Suppose that \(N|\Lambda \sim\) Poisson\((\Lambda)\) and that \(\Lambda \sim\) gamma with mean of 1 and variance of 2. Determine the probability that \(N=1\).

Show Example Solution

Show Quiz Solution

3.7 Real Data Example

In this section, you learn how to:

- Compare a fitted distribution to empirical data to assess the adequacy of the fit

In the above, we have discussed three basic frequency distributions, along with their extensions through zero modification/truncation, and by looking at mixtures of these distributions. Nevertheless, these classes remain parametric and hence a small subset of the class of all possible frequency distributions (that is, the set of distributions on non-negative integers). Hence, even though we have discussed methods for estimating the unknown parameters, the fitted distribution need not be a good representation of the underlying distribution if the latter is far from the class of distribution used for modeling.

While the class of distributions considered above is relatively narrow, via a real example, we present some evidence that they serve insurance purposes quite well.

In \(1993\), a portfolio of \(n=7,483\) automobile insurance policies from a major Singaporean insurance company had the distribution of auto accidents per policyholder as given in Table 3.3.

Table 3.3. Singaporean Automobile Accident Data

\[ \small{ \begin{array}{l|c|c|c|c|c|c} \hline \text{Count }(k) & 0 & 1 & 2 & 3 & 4 & \text{Total}\\ \hline \text{No. of Policies with }k\text{ accidents }(m_k) & 6,996 & 455 & 28 & 4 & 0 & 7,483\\ \hline \end{array} } \] If we a fit a Poisson distribution, then the mle for \(\lambda\), the Poisson mean, is the sample mean which is given by \[ \overline{N} = \frac{0\cdot 6996 + 1 \cdot 455 + 2 \cdot 28 + 3 \cdot 4 + 4 \cdot 0}{7483} = 0.06989. \] Now if we use Poisson (\(\hat{\lambda}_{MLE}\)) as the fitted distribution, then a tabular comparison of the fitted counts and observed counts is given by Table 3.4 below, where \(\hat{p}_k\) represents the estimated probabilities under the fitted Poisson distribution.

Table 3.4. Comparison of Observed to Fitted Counts: Singaporean Auto Data

\[ \small{ \begin{array}{c|r|r} \hline \text{Count} & \text{Observed} & \text{Fitted Counts}\\ (k) & (m_k) & \text{Using Poisson }(n\hat{p}_k)\\ \hline 0 & 6,996 & 6,977.86 \\ 1 & 455 & 487.70 \\ 2 & 28 & 17.04 \\ 3 & 4 & 0.40 \\ \geq 4 & 0 & 0.01\\ \hline \text{Total} & 7,483 & 7,483.00\\ \hline \end{array} } \] Notice that the fit seems quite reasonable from the above tabular comparison, suggesting that the Poisson distribution is a good model of the underlying distribution. Nevertheless, it is worth pointing out that such a tabular comparison falls short of a statistical test of the hypothesis that the underlying distribution is indeed Poisson. In Section 6.1.2, we present Pearson’s chi-square statistic as a goodness-of-fit statistical measure for this purpose.

3.8 Exercises

Theoretical Exercises

Exercise 3.1. Derive an expression for \(p_N(\cdot)\) in terms of \(F_N(\cdot)\) and \(S_N(\cdot)\).

Exercise 3.2. A measure of center of location must be equi-variant with respect to shifts, or location transformations. In other words, if \(N_1\) and \(N_2\) are two random variables such that \(N_1+c\) has the same distribution as \(N_2\), for some constant \(c\), then the difference between the measures of the center of location of \(N_2\) and \(N_1\) must equal \(c\). Show that the mean satisfies this property.

Exercise 3.3. Measures of dispersion should be invariant with respect to shifts and scale equi-variant. Show that standard deviation satisfies these properties by doing the following:

- Show that for a random variable \(N\), its standard deviation equals that of \(N+c\), for any constant \(c\).

- Show that for a random variable \(N\), its standard deviation equals \(1/c\) times that of \(cN\), for any positive constant \(c\).

Exercise 3.4. Let \(N\) be a random variable with probability mass function given by \[ p_N(k)= \begin{cases} \left(\frac{6}{\pi^2}\right)\left(\frac{1}{k^{2}}\right), & k\geq 1;\\ 0, &\hbox{otherwise}. \end{cases} \] Show that the mean of \(N\) is \(\infty\).

Exercise 3.5. Let \(N\) be a random variable with a finite second moment. Show that the function \(\psi(\cdot)\) defined by \[ \psi(x)=\mathrm{E}{(N-x)^2}. \quad x\in\mathbb{R} \] is minimized at \(\mu_N\) without using calculus. Also, give a proof of this fact using derivatives. Conclude that the minimum value equals the variance of \(N\).

Exercise 3.6. Derive the first two central moments of the \((a,b,0)\) distributions using the methods mentioned below:

- For the binomial distribution, derive the moments using only its pmf, then its mgf, and then its pgf.

- For the Poisson distribution, derive the moments using only its mgf.

- For the negative binomial distribution, derive the moments using only its pmf, and then its pgf.

Exercise 3.7. Let \(N_1\) and \(N_2\) be two independent Poisson random variables with means \(\lambda_1\) and \(\lambda_2\), respectively. Identify the conditional distribution of \(N_1\) given \(N_1+N_2\).

Exercise 3.8. (Non-Uniqueness of the MLE) Consider the following parametric family of densities indexed by the parameter \(p\) taking values in \([0,1]\): \[ f_p(x)=p\cdot\phi(x+2)+(1-p)\cdot\phi(x-2), \quad x\in\mathbb{R}, \] where \(\phi(\cdot)\) represents the standard normal density.

- Show that for all \(p\in[0,1]\), \(f_p(\cdot)\) above is a valid density function.

- Find an expression in \(p\) for the mean and the variance of \(f_p(\cdot)\).

- Let us consider a sample of size one consisting of \(x\). Show that when \(x\) equals \(0\), the set of maximum likelihood estimates for \(p\) equals \([0,1]\); also show that the mle is unique otherwise.

Exercise 3.9. Graph the region of the plane corresponding to values of \((a,b)\) that give rise to valid \((a,b,0)\) distributions. Do the same for \((a,b,1)\) distributions.

Exercise 3.10. (Computational Complexity) For the \((a,b,0)\) class of distributions, count the number of basic mathematical operations (addition, subtraction, multiplication, division) needed to compute the \(n\) probabilities \(p_0\ldots p_{n-1}\) using the recurrence relationship. For the negative binomial distribution with non-integer \(r\), count the number of such operations. What do you observe?

Exercise 3.11. Using the development of Section 3.3 rigorously show that not only does the recurrence (3.1) tie the binomial, the Poisson and the negative binomial distributions together, but that it also characterizes them.

Exercise 3.12. Actuarial Exam Question. You are given:

- \(p_k\) denotes the probability that the number of claims equals \(k\) for \(k=0,1,2,\ldots\)

- \(\frac{p_n}{p_m}=\frac{m!}{n!}, m\ge 0, n\ge 0\)

Using the corresponding zero-modified claim count distribution with \(p_0^M=0.1\), calculate \(p_1^M\).

Exercises with a Practical Focus

Exercise 3.13. Singaporean Automobile Accident. In this exercise, we replicate and extend the real-data example introduced in Section 3.7 using R.

- a. From the package

CASdatasets, retrieve the datasgautonbin order to work with the variableClm_Countwhich is a count of claims. Refer to Section 22.6 for a description of this package. Verify that the mean claim count is 0. - b. Compute the fitted Poisson distribution and reproduce Table 3.2.

| Claim Count | Empirical Percentile | Poisson Perc |

|---|---|---|

| 6996 | 0.9349 | 0.9325 |

| 455 | 0.9957 | 0.9977 |

| 28 | 0.9995 | 0.9999 |

| 4 | 1.0000 | 1.0000 |

- c. Compute the maximum likelihood estimates for the negative binomial distribution. One way to do this is to create a negative logarithmic likelihood function and use the

Rfunctionoptimfor minimization. Use the resulting maximum likelihood estimates to create a fitted distribution and augment the Table in part (b) with this alternative distribution.

In part (c), you learn that the more complex negative binomial distribution produces roughly the same fits as the simpler Poisson distribution. As a result of this analysis, an analyst would typically prefer the simpler Poisson distribution.

Solutions for Exercise 3.13

Exercise 3.14. Corporate Travel. This exercise is based on the data set introduced in Exercise 1.1 where now the focus is on frequency modeling. For corporate travel, the number of claims are sufficient that a separate frequency model could be considered. For the frequency of claims, there are 2107 claims over the 2006-2021 period that amounts to 131.69 per year. One might assume that annual claims can be fit using a single distribution to the entire period, such as a Poisson or a negative binomial. Another option is to fit a distribution starting in years 2009, where this is an increase in the amount of claims from prior years. A third option is to omit experience from underwriting year 2019 and on where the number of claims fluctuated dramatically, in part due to the Covid epidemic. In this exercise, we pursue the first option.

- a. Fit a Poisson distribution and a negative binomial distribution to all claims.

- b. Fit a negative binomial distribution to all claims using the strategy introduced in part (c) of Exercise 3.13.

- c. To check your work, use the

fitdistfunction from the packagefitdistrplus, with the negative binomial (nbinom) option. - d. Use the

ecdffunction inRto produce empirical cumulative probabilities. Produce a table that compares the empirical percentiles to those under the Poisson and negative binomial.

From part (d), you learn that both fitted distributions did well and neither outperformed the other.

Solutions for Exercise 3.14

3.9 Further Resources and Contributors

Appendix Chapter 17 gives a general introduction to maximum likelihood theory regarding estimation of parameters from a parametric family. Appendix Chapter 19 gives more specific examples and expands some of the concepts.

If you would like additional practice with R coding, please visit our companion LDA Short Course. In particular, see the Frequency Modeling Chapter.

Contributors

- N.D. Shyamalkumar, The University of Iowa, and Krupa Viswanathan, Temple University, are the principal authors of the initial version and also the second edition of this chapter. Email: shyamal-kumar@uiowa.edu for chapter comments and suggested improvements.

- Chapter reviewers include: Chunsheng Ban, Paul Johnson, Hirokazu (Iwahiro) Iwasawa, Dalia Khalil, Tatjana Miljkovic, Rajesh Sahasrabuddhe, and Michelle Xia.

For convenience, we have indexed \(\mu_N\) with the random variable \(N\) instead of \(F_N\) or \(p_N\), even though it is solely a function of the distribution of the random variable. \[ \mu_N=\sum_{k=0}^\infty k~p_N(k). \] We note that \(\mu_N\) is the expected value of the random variable \(N\), i.e. \(\mu_N=\mathrm{E}[N]\). This leads to a general class of measures, the momentsThe rth moment of a list is the average value of the random variable raised to the rth power of the distribution; the \(r\)-th raw moment of \(N\), for \(r> 0\), is defined as \(\mathrm{E}{[N^r]}\) and denoted by \(\mu_N'(r)\). We remark that the prime \(\prime\) here does not denote differentiation. Rather, it is commonly used notation to distinguish a raw from a central moment, as will be introduced in Section 4.1.1. For \(r>0\), we have

\[ \mu_N'(r)= \mathrm{E}{[N^r]}= \sum_{k=0}^\infty k^r~p_N(k). \] We note that \(\mu_N'(\cdot)\) is a well-defined non-decreasing function taking values in \([0,\infty]\), as \(\Pr(N\in\{0, 1, \ldots \})=1\); also, note that \(\mu_N=\mu_N'(1)\). In the following, when we refer to a moment it will be implicit that it is finite unless mentioned otherwise.↩︎\(0^0 = 1\)↩︎

In the following we suppress the reference to \(N\) and denote the pmf by the sequence \(\{p_k\}_{k\geq 0}\), instead of the function \(p_N(\cdot)\).↩︎

For the theoretical basis underlying the above argument, see Billingsley (2008).↩︎

The set of maximizers of \(L(\cdot)\) are the same as the set of maximizers of any strictly increasing function of \(L(\cdot)\), and hence the same as those for \(l(\cdot)\).↩︎

A slight benefit of working with \(l(\cdot)\) is that constant terms in \(L(\cdot)\) do not appear in \(l'(\cdot)\) whereas they do in \(L'(\cdot)\).↩︎

This in particular lays out a way to simulate from a mixture distribution that makes use of efficient simulation schemes that may exist for the component distributions.↩︎